How Does Spark Work?

Spark Page is for creating attractive, engaging sites from scratch. A related question explains how sc.textFile delegates to a Hadoop TextInputFormat. Spark can then run built-in operations such as joins, filters, and aggregations on the data, provided it is able to read the data.

Pin By Saravana Shanmugam On Data Apache Spark Pattern Design Pattern From pinterest.com

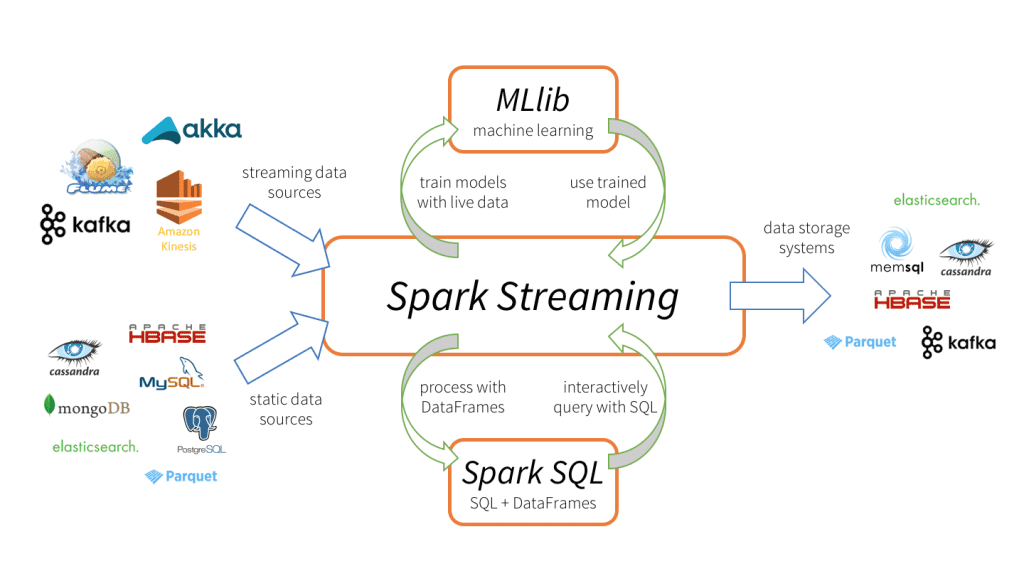

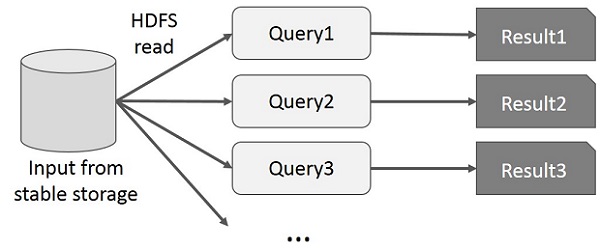

The main method invokes sparkContext.stop(). The piston then goes back up toward the spark plug, compressing the mixture. Spark also supports a rich set of higher-level tools, including Spark SQL for SQL and structured data processing and MLlib for machine learning.

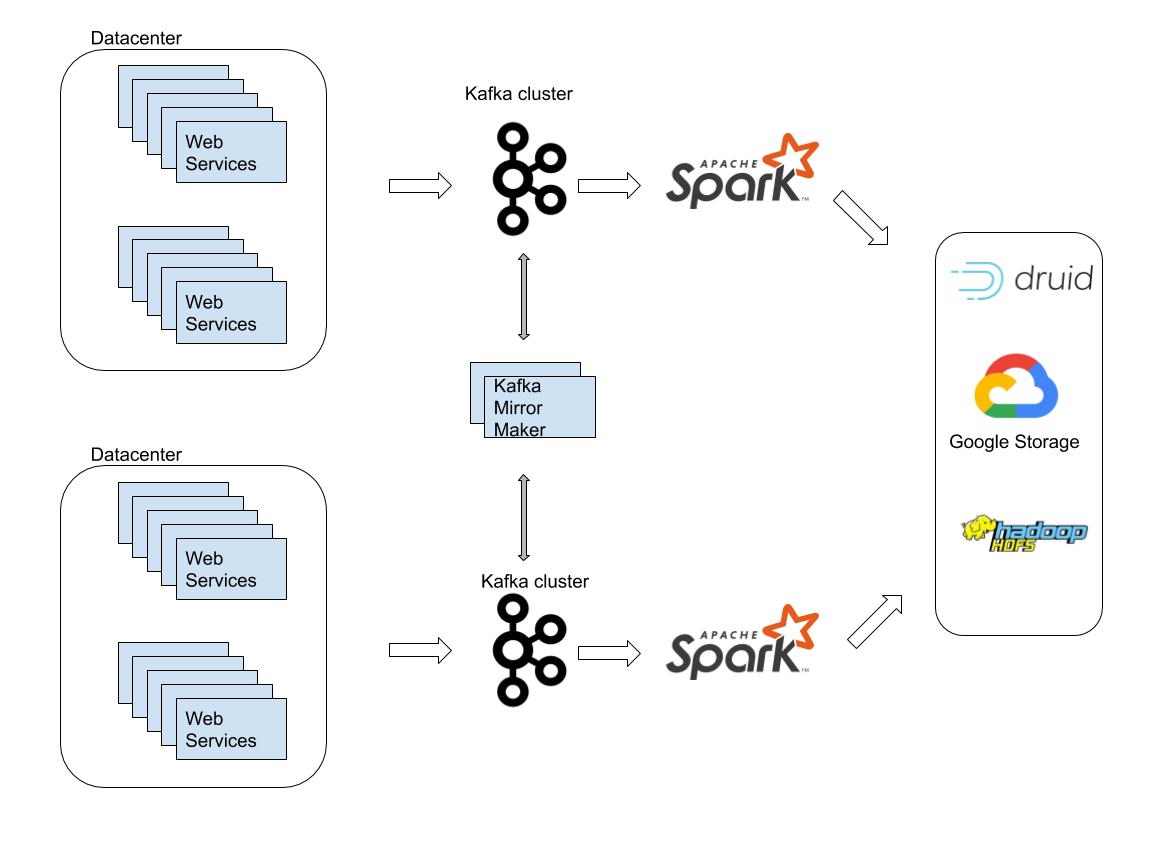

The Spark driver is responsible for converting a user program into units of physical execution called tasks.

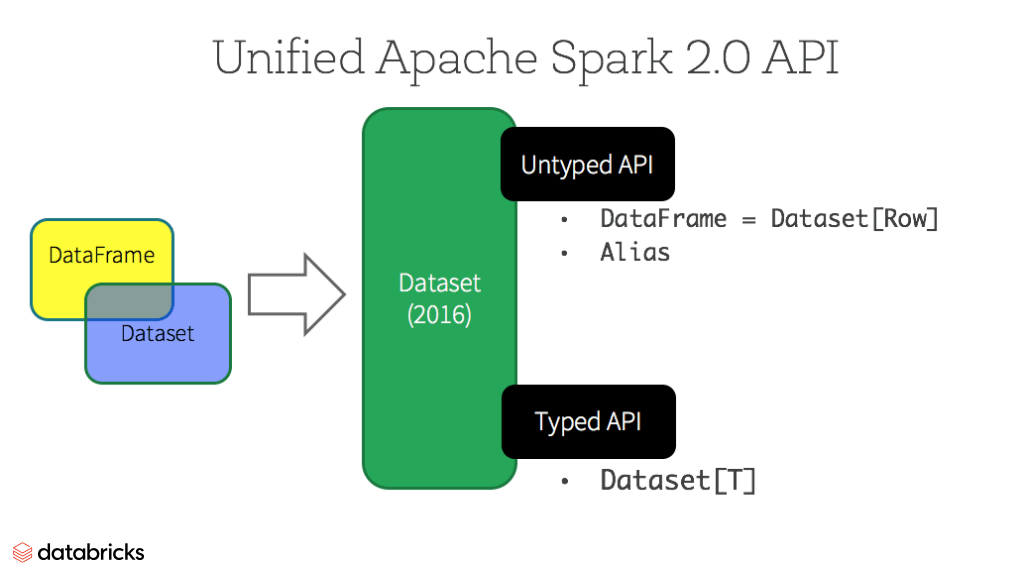

SQLContext is a class used for initializing the functionality of Spark SQL. The fuel and air are mixed, forming a highly combustible mixture, and injected into the cylinders, where the ignition coil releases the appropriate voltage to create a spark at the plug, which ignites a small explosion in the spark channel to power the engine. The spark plug sits at the top of the cylinder head. However, in simple cases you most probably won't need to use this functionality. Everything can be done and managed via the app: accepting orders, obtaining directions, etc. This design app is helpful for creating visual stories and allows any user, from a novice to someone highly competent, to achieve nice results.

Source: tutorialspoint.com

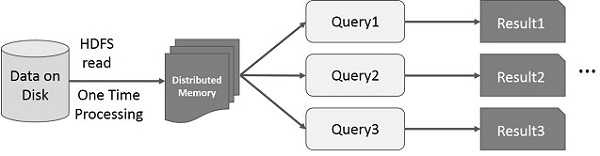

A SparkContext object, sc, is required for initializing a SQLContext object. Apache Spark is an open-source cluster computing system designed for fast processing and analysis of massive data. A Spark application runs as independent processes coordinated by the SparkSession object in the driver program.

Source: pinterest.com

There are methods, at least in Hadoop, to find out on which nodes parts of a file are currently located. The cluster manager is a pluggable component in Spark.

Source: pinterest.com

Each task runs on an executor and, upon completion, returns its result to the driver. If you are wondering how to use Adobe Spark, there are three different modes. By default, the SparkContext object is initialized with the name sc when the spark-shell starts; a SQLContext can then be created from it. As such, Page is better suited starting in Grades 4 or 5.

Source: pinterest.com

The resource or cluster manager assigns tasks to workers, one task per partition. Spark distributes the data in its workers' memory.

Source: pinterest.com

We look at the main components of a spark plug and how the spark plug functions. A task applies its unit of work to the dataset in its partition and outputs a new partition dataset. Apache Spark is a unified analytics engine for large-scale data processing.
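The idea that a task applies its unit of work to one partition and returns its result to the driver can be sketched in plain Python. This is not Spark code: the thread pool only stands in for executors, and `run_task`, `run_job`, and the sample `partitions` are hypothetical names chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical input: a dataset already divided into three partitions.
partitions = [[1, 2, 3], [4, 5], [6, 7, 8, 9]]


def run_task(partition):
    """One task: apply the unit of work (here, squaring each element)
    to a single partition and return a new partition dataset."""
    return [x * x for x in partition]


def run_job(partitions, max_workers=2):
    """Stand-in for the driver: launch one task per partition on a pool of
    worker threads (playing the role of executors) and gather the results."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with partitions.
        return list(pool.map(run_task, partitions))


new_partitions = run_job(partitions)

# The "driver" combines the per-partition results into a final answer.
total = sum(sum(p) for p in new_partitions)
```

There are three partitions, so three tasks run, even though only two workers are available; the third task simply waits for a free worker.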

Source: pinterest.com

Spark erosion is carried out with metal disintegration machines (EDM). The spark plug is a seemingly simple device, although it is tasked with a couple of different but critical jobs. Once the physical plan is generated, Spark allocates the tasks to the executors.

Source: pinterest.com

First and foremost, the spark plug creates, quite literally, an artificial bolt of lightning within the combustion chamber (cylinder head) of the engine. Spark plugs also transfer heat away from the combustion chamber.

Source: pinterest.com

Spark provides high-level APIs in Java, Scala, Python, and R, and an optimized engine that supports general execution graphs.

Source: pinterest.com

Finally, when all tasks are completed, the main method running in the driver exits.

Source: pinterest.com

When customers place an order, order offers become visible to available drivers, who earn money by picking up and delivering them.

Source: pinterest.com

As little or no typing is needed, younger children, even preschoolers, find Spark Video easy and accessible.

Source: databricks.com

The piston first travels down the cylinder, drawing in a mixture of fuel and air. This process occurs at a rapid rate, typically thousands of times per minute, and the spark plug is its backbone.

Source: outbrain.com

Spark Pages are primarily used to share written stories, so typing and spelling are required.

Source: in.pinterest.com

Source: databricks.com

A TextInputFormat does a listStatus to get a complete listing of the files in a directory with their corresponding sizes, and then uses various split-sizing configuration settings to chop it up into an array of so-called splits, which are just a filename plus a byte range.
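The split computation described above can be illustrated with a simplified plain-Python sketch. It is not Hadoop's actual code: the real logic consults more configuration settings than the single `split_size` used here, and `Split`, `compute_splits`, and the sample file listing are hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Split:
    filename: str
    start: int   # byte offset where the split begins
    length: int  # number of bytes covered by the split


def compute_splits(listing, split_size):
    """Chop every file in a (filename, size) listing into byte-range splits.

    Assumption: one fixed split_size for all files; real split sizing
    depends on several configuration settings.
    """
    if split_size <= 0:
        raise ValueError("split_size must be positive")
    splits = []
    for filename, size in listing:
        offset = 0
        while offset < size:
            length = min(split_size, size - offset)
            splits.append(Split(filename, offset, length))
            offset += length
    return splits


# Hypothetical directory listing: file names with their sizes in bytes.
listing = [("part-0.txt", 250), ("part-1.txt", 100)]
splits = compute_splits(listing, split_size=128)
```

With these inputs the 250-byte file yields two splits and the 100-byte file yields one, so three splits come back, each carrying only a filename and a byte range.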

Source: tutorialspoint.com

This 3D animated video shows how a spark plug works.

Source: pinterest.com

Alternatively, the scheduling can also be done in round-robin fashion.
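Round-robin scheduling, as mentioned above, hands out work to each worker in turn. The following plain-Python sketch shows the general technique only; it does not reproduce Spark's scheduler, and `assign_round_robin`, the task names, and the executor names are all hypothetical.

```python
from itertools import cycle


def assign_round_robin(tasks, executors):
    """Assign tasks to executors in turn: the first task goes to the first
    executor, the second to the next, wrapping around at the end."""
    if not executors:
        raise ValueError("at least one executor is required")
    assignment = {executor: [] for executor in executors}
    # cycle() repeats the executor list endlessly; zip stops when tasks run out.
    for task, executor in zip(tasks, cycle(executors)):
        assignment[executor].append(task)
    return assignment


# Hypothetical task and executor names.
assignment = assign_round_robin(
    ["t0", "t1", "t2", "t3", "t4"],
    ["exec-a", "exec-b"],
)
```

Here `exec-a` receives `t0`, `t2`, and `t4`, while `exec-b` receives `t1` and `t3`. Round robin ignores how long each task takes, which is why it is only one of several possible scheduling policies.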

Source: pinterest.com

Spark can process data from different data repositories, including NoSQL databases and the Hadoop Distributed File System (HDFS).
